This post first appeared in the San Jose Mercury News

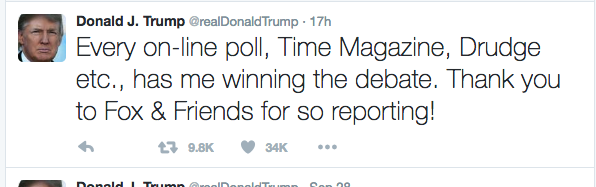

At a Florida rally the day after the presidential debate, Donald Trump said that “almost every single poll had us winning the debate against crooked Hillary Clinton, big league.” But notwithstanding Trump’s charge about Clinton’s honesty, the online polls he was referring to were themselves a bit “crooked,” or at the very least unscientific and unreliable. The scientific polls that came out a couple of days later all showed that most people felt Clinton won the debate.

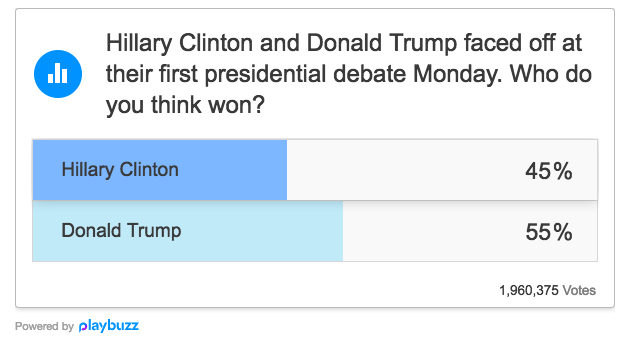

Trump was talking about opt-in online polls, which means that anyone who knew where to go to register their opinion was able to weigh in. While that’s one way for people to express themselves, it is by no means a scientific view of the electorate. As Time.com disclosed with its poll results, which had Trump leading Clinton 55 percent to 45 percent, “Online reader polls like this one are not statistically representative of likely voters, and are not predictive of how the debate outcome will affect the election. They are a measure, however imprecise, of which candidates have the most energized online supporters, or most social media savvy fan base.”

CNBC, which showed Trump winning by 67 percent to 33 percent, also admitted that the results of its “informal poll … are not scientific and may not necessarily reflect the opinions of the public as a whole.”

The online poll at Drudge.com, a conservative news website, had Trump ahead by 82 percent to 18 percent, and there was no evident disclosure about its unscientific methodology.

Even Time’s disclosure that these polls reflect which candidates have “the most energized online supporters, or most social media savvy fan base” may be misleading. There are published reports that people on the Reddit and 4chan message boards were encouraging Trump supporters to respond to these polls. And while it’s true that Trump does have many energized online supporters, such a concerted effort to drive them to online polls could result in their being overrepresented even beyond what you’d expect from such enthusiastic supporters.

Also, some of these polls allow you to vote as many times as you want, making it easy for a partisan group to skew the results.

I’ll leave it to political pundits to comment on who really won the debate, but as a former survey researcher who once taught university classes on research methodology, I do feel qualified to inject some skepticism about any online opt-in poll.

The problem isn’t that these polls were conducted online. There are many legitimate research organizations that use the internet to gather scientifically valid opinion data from respondents who are representative of the population being studied. But most of the polls cited immediately after the debate didn’t employ even the most basic sampling methodology to ensure that the results were reflective of the population they sampled.

Although there are numerous sampling methodologies available to serious pollsters, the good ones all strive to approximate the results that would be achieved if it were possible to ask every affected person. Of course, it’s not possible to ask everyone who watched the debate who they thought won, but it is possible to carefully select a sample of debate watchers that represents the total population. And, depending on the sample size and the methodology, you can get a pretty good approximation (with some margin of error) of the answers you would have gotten if you had asked everyone.

That’s the way most legitimate polls and surveys work. And, while none are perfect and all are subject to errors and inaccuracies, the ones that pay careful attention to proper sampling generally give a pretty good snapshot of the opinions – at that moment in time – of whatever group is being sampled.

Of course, it’s important to know the population being sampled. A sample of Americans isn’t the same as a sample of registered voters and that sample isn’t the same as likely voters. And while relatively small numbers – sometimes only a few hundred – can often give a pretty good representation of a large population, there are traps to avoid. For example, if you poll 500 Americans and only 6 percent of that sample are African-American women, the sample size of that subgroup is likely too small to make any generalizations, which is why it’s sometimes necessary to oversample certain groups.
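For readers who want to see the arithmetic behind that example, here is a minimal Python sketch. It assumes a simple random sample and uses the standard textbook approximation for a 95 percent margin of error on a proportion, with the worst-case split of 50/50. The function name and the default values are mine, chosen for illustration; real pollsters apply weighting and design adjustments that this sketch ignores.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion p
    estimated from a simple random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)

full_sample = 500
# 6 percent of 500 respondents is a subgroup of 30 people
subgroup = round(full_sample * 0.06)

print(f"n={full_sample}: +/- {margin_of_error(full_sample):.1%}")
print(f"n={subgroup}: +/- {margin_of_error(subgroup):.1%}")
```

Under these assumptions, the full sample of 500 carries a margin of error of roughly 4 percentage points, while the 30-person subgroup is closer to 18 points, which is far too wide to say much about that group.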

But – whatever the group and regardless of the number of respondents – an opt-in survey where people can self-select into the sample is fraught with potential bias.

I don’t spend a lot of time dissecting political polls, but I do look at a lot of surveys regarding cyberbullying and other risky online behaviors and have found numerous examples of polls that were either poorly done or inaccurately reported.

In 2014 I wrote a post for the journalism site Poynter.org (www.larrysworld.com/surveys), where I cautioned fellow journalists to “Beware sloppiness when reporting on surveys.” One example I gave was a much-cited study that found that 69 percent of youth had been subjected to cyberbullying, based on a sample of nearly 11,000 young people between 13 and 22. A lot of people who quoted that study were impressed by the sample size, but what they might not have realized was that the survey was opt-in and accessible from the webpage of a U.K.-based anti-bullying organization which, by its very nature, attracted people who were affected by bullying. It was like asking people in a dermatologist’s waiting room about whether they have acne and using the results to predict the acne rate for the entire population.
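The waiting-room effect can be illustrated with a small simulation. This is only a sketch with invented numbers: the 20 percent "true" rate and the response probabilities are hypothetical values I picked to show the mechanism, not figures from the study or from any real survey.

```python
import random

random.seed(42)

TRUE_RATE = 0.20           # hypothetical share of people affected
POPULATION_SIZE = 100_000
# Hypothetical chance of answering a self-selected web poll:
# affected people are assumed to be ten times more likely to respond.
OPT_IN_PROB = {True: 0.10, False: 0.01}

# Each entry is True if that person is affected, False otherwise
population = [random.random() < TRUE_RATE for _ in range(POPULATION_SIZE)]

# A random sample gives everyone the same chance of being asked
random_sample = random.sample(population, 1_000)

# An opt-in sample contains only the people who chose to respond
opt_in_sample = [
    affected for affected in population
    if random.random() < OPT_IN_PROB[affected]
]

def share(sample):
    """Fraction of respondents in the sample who are affected."""
    return sum(sample) / len(sample)

print(f"Random sample of {len(random_sample)}: {share(random_sample):.0%} affected")
print(f"Opt-in sample of {len(opt_in_sample)}: {share(opt_in_sample):.0%} affected")
```

With these made-up settings, the random sample should land close to the true 20 percent, while the larger opt-in sample reports a rate several times higher. The bigger sample is the less accurate one, because who chose to respond matters more than how many responded.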

One of my favorite quotes is “figures don’t lie, but liars figure,” and while not everyone who conducts or reports on a flawed poll is intentionally misleading, there is good reason to be skeptical of what they’re saying.